Introduction

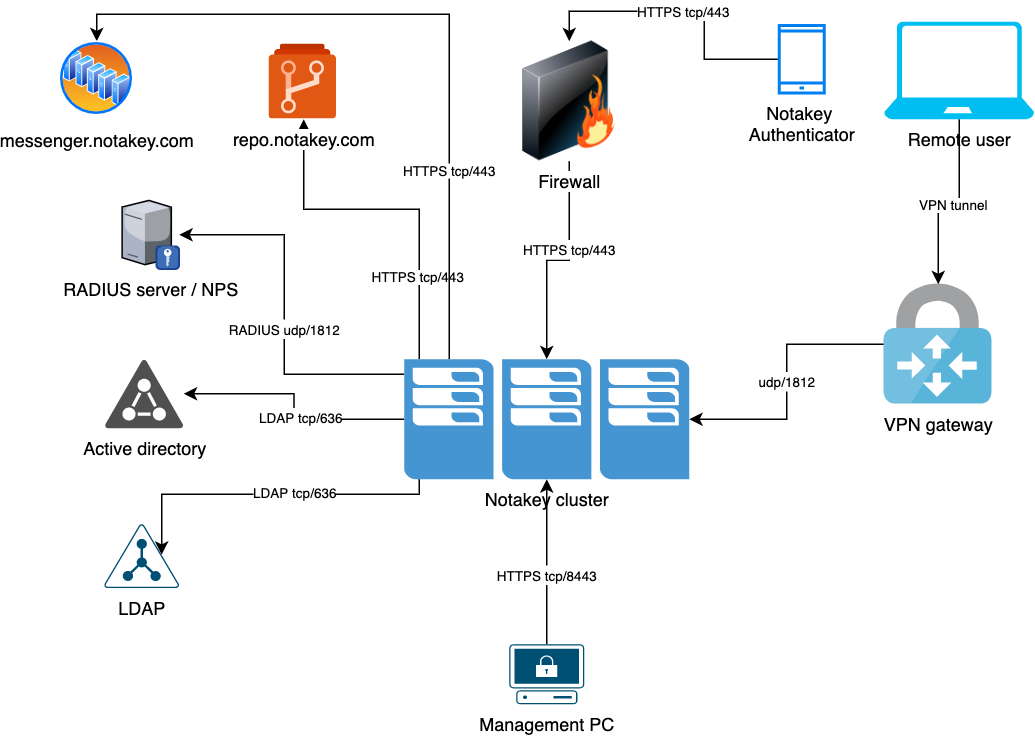

Notakey Authentication Appliance (NtkAA) is a virtual machine based on RancherOS Linux. It contains:

- the core Notakey API software;
- a RADIUS proxy that transparently integrates the Notakey API into existing RADIUS-based workflows;
- a Single Sign-On (SSO) web service supporting SAML, OAuth and other standard authentication protocols;
- various plugins for integration with cloud and local services.

NtkAA supports various redundancy and load balancing schemes. It fully supports zero-downtime rolling updates, which can be requested with a single command.

Scaling is supported by the underlying cluster architecture. Additional members can join with no downtime, and role-based replication can be configured in detail. Groups of nodes can be delegated to specific tasks, such as serving as the mobile API backend or the SSO frontend.

Product Versions in Appliance

This user manual’s version number will match the corresponding appliance’s version number.

Each appliance contains the following software:

- Notakey Authentication Server (NtkAs) (version: 3.1.1)

- Notakey Authentication Proxy (NtkAp) (version: 1.0.5)

- VRRP agent (VRRPa) (version: 2.1.5-1)

- Notakey SSO (NtkSSO) (version: 3.1.7)

The appliance version number, or this user manual’s version number, will not typically match the version numbers of either NtkAs or NtkAp.

Technical Summary

The appliance is a self-contained environment with no mandatory external dependencies. The Notakey software is installed by the setup wizard. To avoid potential software conflicts, the VM used for the Notakey software installation should be single-purpose.

The appliance is stripped down as much as possible. Only the system components the software needs to operate are left on the system, which keeps the attack surface to a minimum.

During installation, the appliance can be provisioned with a limited, special-purpose user for daily management tasks. This user has all the privileges needed to manage the provided services but no system-level access.

Appliance Licences

Each appliance cluster has an associated licence type and an associated version.

Appliances with the same version but different licences support the same features. Feature availability is determined by the licence version. Throughout this document, features whose availability depends on the licence type are specially annotated.

| Licence Type | Description |
|---|---|
| Express | Licence for deployments with under 2000 users. |
| Enterprise | Licence for deployments with over 10 000 users. |

Express licence

A per-user licence is issued when a user onboards a mobile device. It remains locked to that user until the onboarded device is removed (offboarded). Contact your sales representative for more details.

Enterprise licence

User licence usage is calculated over a rolling window of a fixed number of days. Only users with authentication or onboarding activity inside this window are counted. Contact your sales representative for more details.
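The sketch below illustrates the Enterprise counting rule: the licensed user count is the number of distinct users with at least one authentication or onboarding event inside the window. It assumes a hypothetical activity log with one `<epoch_seconds> <user_id> <event>` line per event. The real window length and data source are defined by your licence, not by this example, and `active_users_in_window` is an illustrative name.

```shell
#!/bin/sh
# Illustration only: count distinct users with any activity inside a rolling
# window of WINDOW_DAYS days that ends at NOW (epoch seconds).
# Input on stdin (hypothetical format): "<epoch_seconds> <user_id> <event>"
#   $1 - window length in days   $2 - end of the window, epoch seconds
active_users_in_window() {
  window_days=$1
  now=$2
  awk -v now="$now" -v days="$window_days" '
    # keep only events inside [now - days*86400, now]
    $1 >= now - days * 86400 && $1 <= now { seen[$2] = 1 }
    END {
      n = 0
      for (u in seen) n++
      print n
    }'
}
```

For example, `active_users_in_window 30 "$(date +%s)" < activity.log`. The value 30 is only a placeholder for the window length.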

System Requirements

Infrastructure Requirements

- Local network for appliance virtual machines (VMs)

The Notakey Appliance VM requires at least one internal network interface with a single internal IP address, and public internet access during setup. If you use multiple VMs, they should share a dedicated network for Notakey services that contains only Notakey Appliance VMs and the router.

- Dedicated public IP address

A single public IP address is required to set up port forwarding and to issue a DNS name for the service. This does not apply to load balancer or cloud deployment scenarios.

- Ingress controller

In the most basic use case, this is your local network router with a firewall and destination network address translation (dNAT) capability. Forward port tcp/443 (https) of the public IP address to the internal IP address of the appliance VM. If you use automatic Let’s Encrypt TLS certificates, also forward port tcp/80 (http) so that domain name validation can succeed. Larger-scale deployments can use external load balancers to scale the service as required. Notakey services can also be deployed to your Kubernetes cloud infrastructure; contact our support for more details.

- Dedicated DNS name for service

A DNS name is required to assign and validate the TLS certificate. The Notakey Authenticator application requires TLS to operate.

- (Optional) TLS certificate from trusted CA

If you do not use an automatic Let’s Encrypt TLS certificate, you need to provide a TLS key and certificate file for the dedicated DNS name of the Authentication Server service. Both must be in PEM (base64-encoded) format, and the certificate file must include the certificate chain.
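For the basic router scenario above, the port forwarding can be sketched as follows. This assumes a Linux-based router that uses iptables. The helper name `print_dnat_rules`, the interface name and the addresses are illustrative. The function only prints the rules so you can review them before applying them as root. The FORWARD rules matter only if the router's forward policy drops traffic by default. Most commercial routers expose the same dNAT setting in their own management interface instead.

```shell
#!/bin/sh
# Print (do not apply) iptables dNAT rules that forward HTTPS, and optionally
# HTTP for Let's Encrypt validation, from the router's public interface to the
# appliance VM.
#   $1 - public (WAN) interface on the router, e.g. eth1
#   $2 - internal IP address of the appliance VM
#   $3 - "yes" to also forward tcp/80 for Let's Encrypt, anything else to skip
print_dnat_rules() {
  wan_if=$1
  appliance_ip=$2
  use_letsencrypt=$3

  ports="443"
  if [ "$use_letsencrypt" = "yes" ]; then
    ports="443 80"
  fi

  for port in $ports; do
    # rewrite the destination of incoming connections to the appliance
    echo "iptables -t nat -A PREROUTING -i $wan_if -p tcp --dport $port -j DNAT --to-destination $appliance_ip:$port"
    # allow the translated traffic through the FORWARD chain
    echo "iptables -A FORWARD -i $wan_if -p tcp -d $appliance_ip --dport $port -j ACCEPT"
  done
}
```

For example, `print_dnat_rules eth1 192.168.1.2 yes` prints four rules: two for port 443 and two for port 80.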

Hypervisor Compatibility

The following table is an appliance version/hypervisor compatibility matrix:

| Appliance Version | Supported Virtualization Environments |
|---|---|
| <= 5.* | Hyper-V (Windows Server 2012 R2 and newer) |
| <= 5.* | VMware ESXi 5.5 (and newer) |
| <= 5.* | Oracle VirtualBox 5.2 (and newer) |

VMware Information

The following table contains information about different appliance versions, as it pertains to VMware ESXi.

| Appliance Version | Virtual Hardware Version |
|---|---|
| <= 3.* | vmx-09 |

Hardware Requirements

The Notakey service is typically CPU-bound, because most operations read from an in-memory store.

Minimum Requirements (Less Than 1000 Users)

For a deployment with less than 1000 users, the suggested requirements are:

| Resource | Value |
|---|---|
| RAM | 4 GB |
| vCPU | 2 |
| CPU type | 2.3 GHz Intel Xeon® E5-2686 v4 (Broadwell) or newer |
| Storage | 32 GB |

Suggested Requirements

For a typical deployment, with up to 300 000 users, the suggested hardware requirements are:

| Resource | Value |
|---|---|
| RAM | 8 GB |
| vCPU | 8 |
| CPU type | 2.3 GHz Intel Xeon® E5-2686 v4 (Broadwell) or newer |
| Storage | 256 GB |

Availability considerations

In a 3-node deployment, if one node fails its health check (or becomes unavailable), traffic can be routed to the remaining nodes.

NOTE: More than half of the nodes must be operational for full service. If a majority of the nodes is not operational, the remaining nodes operate in a limited capacity.

They will allow:

- new authentication requests for existing users, based on previously issued API access tokens

They will not allow:

- onboarding new users
- changing onboarding requirements
- creating new applications
- editing existing applications or users
- issuing new API access tokens

In addition, the partially operational nodes will not log authorization requests in the web user interface. These requests can still be logged to rsyslog, if so configured.

General failure tolerance depends on the total number of nodes. To maintain data integrity, a quorum of servers must be available. The quorum size is $C_{\text{quorum}} = \left\lfloor C_{\text{servers}} / 2 \right\rfloor + 1$.

The failure tolerance is $FT = C_{\text{servers}} - C_{\text{quorum}}$.

In other words, more than half of all servers must be available and operational.

| Total servers | Quorum size | Failure tolerance |
|---|---|---|
| 1 | 1 | 0 |
| 2 | 2 | 0 |
| 3 | 2 | 1 |
| 4 | 3 | 1 |
| 5 | 3 | 2 |
| 6 | 4 | 2 |
| 7 | 4 | 3 |
| 8 | 5 | 3 |
| 9 | 5 | 4 |
| 10 | 6 | 4 |
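You can check the quorum formula against the table with a few lines of shell. The function names `quorum_size`, `failure_tolerance` and `print_quorum_table` are illustrative. Shell integer division truncates, which gives the same result as the floor for positive server counts.

```shell
#!/bin/sh
# quorum = floor(N / 2) + 1 ; failure tolerance = N - quorum
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

failure_tolerance() {
  echo $(( $1 - $(quorum_size "$1") ))
}

# Print the table for 1..10 servers, tab separated:
# servers, quorum size, failure tolerance
print_quorum_table() {
  n=1
  while [ "$n" -le 10 ]; do
    printf '%s\t%s\t%s\n' "$n" "$(quorum_size "$n")" "$(failure_tolerance "$n")"
    n=$((n + 1))
  done
}
```

For example, `failure_tolerance 5` prints 2. A 5-node cluster can lose two nodes and keep its quorum of three.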

Network Connectivity

Generally, the only port required for normal operation is TCP/443, if TLS is terminated on the appliance. A configuration with external load balancers is also available; in that case, the required ports depend on the service. See the individual service documentation below for more information.

The appliance connects to the outside world via HTTPS and requires several hosts to be reachable for normal operation. The update repository and the push notification service can also be run on premises, if required. Contact your support representative for more details.

Appliance Installation

The appliance is installed by first installing the base RancherOS container Linux. Any version since 1.1 should work, but we recommend the latest release. After the OS is installed to disk and the network is configured, Notakey services can be installed with the notakey/cfg container image.

Download the latest RancherOS installation ISO from https://github.com/rancher/os/releases.

Provision a virtual machine according to your hardware requirements.

Boot the virtual machine from the ISO rancheros.iso.

Provision the appliance with cloud-config (optional):

- Set a temporary password for the installer:

sudo passwd rancher

- Copy the deployment file to the VM:

scp cloud-config.yml rancher@myip:~

Install RancherOS to disk (see the RancherOS documentation for more details).

# With provisioning file

$ sudo ros install -c cloud-config.yml -d /dev/sda

# To allow local logins with rancher sudo user (this will allow local terminal login)

$ sudo ros install -d /dev/sda --append "rancher.password=mysecret"

INFO[0000] No install type specified...defaulting to generic

WARN[0000] Cloud-config not provided: you might need to provide cloud-config on boot with ssh_authorized_keys

Installing from rancher/os:v1.5.5

Continue [y/N]: y

INFO[0002] start !isoinstallerloaded

INFO[0002] trying to load /bootiso/rancheros/installer.tar.gz

69b8396f5d61: Loading layer [==================================================>] 11.89MB/11.89MB

cae31a9aae74: Loading layer [==================================================>] 1.645MB/1.645MB

78885fd6d98c: Loading layer [==================================================>] 1.536kB/1.536kB

51228f31b9ce: Loading layer [==================================================>] 2.56kB/2.56kB

d8162179e708: Loading layer [==================================================>] 2.56kB/2.56kB

3ee208751cd2: Loading layer [==================================================>] 3.072kB/3.072kB

Loaded image: rancher/os-installer:latest

INFO[0005] Loaded images from /bootiso/rancheros/installer.tar.gz

INFO[0005] starting installer container for rancher/os-installer:latest (new)

time="2020-04-14T19:07:05Z" level=warning msg="Cloud-config not provided: you might need to provide cloud-config on boot with ssh_authorized_keys"

Installing from rancher/os-installer:latest

mke2fs 1.45.2 (27-May-2019)

64-bit filesystem support is not enabled. The larger fields afforded by this feature enable full-strength checksumming. Pass -O 64bit to rectify.

Creating filesystem with 4193792 4k blocks and 4194304 inodes

Filesystem UUID: 409a9dd6-f91a-45f2-ab0a-772d52b1a390

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000

Allocating group tables: done

Writing inode tables: done

Creating journal (16384 blocks): done

Writing superblocks and filesystem accounting information: done

Continue with reboot [y/N]: y

INFO[0046] Rebooting

INFO[0047] Setting reboot timeout to 60 (rancher.shutdown_timeout set to 60)

[ ] reboot:info: Setting reboot timeout to 60 (rancher.shutdown_timeout set to 60)

[ ] reboot:info: Stopping /docker : 42f28ebab847

[ ] reboot:info: Stopping /open-vm-tools : 4fbf05cf6dca

[ ] reboot:info: Stopping /ntp : 7e613153f723

[ ] reboot:info: Stopping /network : 89a0f9f74c14

[ ] reboot:info: Stopping /udev : a85c5a659768

[ ] reboot:info: Stopping /syslog : 3391d49d2508

[ ] reboot:info: Stopping /acpid : 4cb434297562

[ ] reboot:info: Stopping /system-cron : dbace052a6d0

[ ] reboot:info: Console Stopping [/console] : 7761e93fa8bb

Log in with your SSH key or the previously configured password.

Configure networking or use DHCP (enabled by default).

$ sudo ros config set rancher.network.interfaces.eth0.address 172.68.1.100/24

$ sudo ros config set rancher.network.interfaces.eth0.gateway 172.68.1.1

$ sudo ros config set rancher.network.interfaces.eth0.mtu 1500

$ sudo ros config set rancher.network.interfaces.eth0.dhcp false

$ sudo ros config set rancher.network.dns.nameservers "['192.168.4.1','192.168.2.1']"

- Validate new configuration.

$ sudo ros c validate -i /var/lib/rancher/conf/cloud-config.yml

# No errors means config is ok

- Switch to a console that supports state persistence.

$ sudo ros console switch alpine

Switching consoles will

1. destroy the current console container

2. log you out

3. restart Docker

Continue [y/N]: y

Pulling console (docker.io/rancher/os-alpineconsole:v1.5.5)...

v1.5.5: Pulling from rancher/os-alpineconsole

9d48c3bd43c5: Pull complete

cbc3261af7c6: Pull complete

5d1a52b7b5d3: Pull complete

Digest: sha256:39de2347295a5115800d6d9d6d747a8c91d2b06d805af927497754a043072188

Status: Downloaded newer image for rancher/os-alpineconsole:v1.5.5

Log in to the appliance again.

If you use a static IP, reboot to apply the network configuration:

sudo reboot

Optionally, disable the local password. Run the following command and remove the appended rancher.password=... part from the configuration file:

sudo ros config syslinux

Install appliance management and run the installation and configuration wizard:

docker run -it \

--privileged \

--net=host \

-v /var/run/docker.sock:/var/run/docker.sock:rw \

-v /:/appliancehost \

notakey/cfg:latest install

Unable to find image 'notakey/cfg:latest' locally

latest: Pulling from notakey/cfg

c9b1b535fdd9: Pull complete

d1804e804407: Pull complete

Digest: sha256:8a9fb9f387c7f493a964cfad453924b6031935b737eab31ebaa8451c31df689f

Status: Downloaded newer image for notakey/cfg:latest

_______ __ __

\ \ _____/ |______ | | __ ____ ___.__.

/ | \ / _ \ __\__ \ | |/ // __ < | |

/ | ( <_> ) | / __ \| <\ ___/\___ |

\____|__ /\____/|__| (____ /__|_ \\___ > ____|

\/ \/ \/ \/\/

Welcome to the Notakey Authentication Appliance

installation wizard.

This wizard will install required utilities for appliance

management.

Do you wish to continue? (y/n): y

==> Detecting OS

Detected RancherOS version v1.5.5 from os image rancher/os:v1.5.5

==> Detecting console

==> Determining user

Assuming rancher

==> Creating directories

==> Installing management utilities

==> Installing default config

==> Installing ntk cli wrapper

==> Pulling the specified tag... skipping (--skip-pull detected)

==> Checking if tag exists locally... ok

==> Writing the wrapper... ok

==> Setting executable permission... ok

==> Installing coreutils...done

==> RancherOS configuration

==> Storing version information

==> Storing configuration

NOTE: Install can create user with limited privileges

that can be used for appliance daily management.

This user has only access to ntk prompt, docker and

few basic utilities.

Do you wish create limited user? (y/n): n

==> Configuring assets directory

Assets stored in /home/rancher/userlogos

Edit /opt/notakey/cfg/.env to adjust

==> Downloading docker images

==> The following package versions will be downloaded

downloading all latest packages

dashboard: dashboard:2.19.3

auth-proxy: authproxy:1.0.4

cli: cfg:5.0.0

consul: consul:1.5.2

traefik: traefik:1.7.21

vrrp: keepalived:2.0.20

htop: htop:latest

sso: sso-idp:2.4.17

==> Downloading from repo.notakey.com

downloading dashboard:2.19.3

downloading authproxy:1.0.4

downloading cfg:5.0.0

downloading consul:1.5.2

downloading traefik:1.7.21

downloading keepalived:2.0.20

downloading htop:latest

downloading sso-idp:2.4.17

==> Configuration update

update complete

Do you wish to run configuration wizard? (y/n): y

... wizard continues, see below for details
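You can prepare the optional cloud-config.yml for the installation steps on your workstation before copying it to the VM. This is a minimal sketch, assuming a single eth0 interface with a static IPv4 address. The keys mirror the rancher.network.* settings used with ros config set above, and ssh_authorized_keys is the key mentioned in the installer warning. `write_cloud_config` is a hypothetical helper, and all values are examples.

```shell
#!/bin/sh
# Write a minimal RancherOS cloud-config.yml with one SSH key and a static
# IPv4 configuration for eth0.
#   $1 output file   $2 SSH public key (single line)   $3 address in CIDR
#   $4 gateway       $5 DNS server
write_cloud_config() {
  out=$1
  pubkey=$2
  address=$3
  gateway=$4
  dns=$5
  cat > "$out" <<EOF
#cloud-config
ssh_authorized_keys:
  - $pubkey
rancher:
  network:
    interfaces:
      eth0:
        address: $address
        gateway: $gateway
        mtu: 1500
        dhcp: false
    dns:
      nameservers:
        - $dns
EOF
}
```

For example, run `write_cloud_config cloud-config.yml "$(cat ~/.ssh/id_rsa.pub)" 192.168.1.2/24 192.168.1.1 192.168.1.1`. Then check the file with `sudo ros c validate -i cloud-config.yml` before passing it to `ros install -c`.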

Appliance Configuration

Multiple user provisioning

If you want to carry out administration tasks via the CLI under different user contexts to avoid credential sharing, you have two options:

- all admins use the rancher user context and log on with individual SSH public keys (this is the recommended RancherOS way);
- create a separate admin user for each person, who then sets up an individual SSH public key to log in.

Creating individual users

Run the following commands to add a new user with the username admin1. Replace all instances of admin1 with the username you want to add.

sudo adduser -s /bin/bash admin1

sudo addgroup admin1 docker

Sudo access lets a user add new admin users and make system service changes. Normally, all daily operations can be carried out with the ntk wrapper command. Add the user to sudo only if they need to invoke ros to manage the RancherOS configuration. If you used a password during setup, remember to remove the rancher user password with sudo ros config syslinux.

# To allow root level access, add user to sudo group

sudo addgroup admin1 sudo

# Add user to sudoers file and change permissions

sudo sh -c 'echo "admin1 ALL=(ALL) ALL" > /etc/sudoers.d/admin1 && chmod 0440 /etc/sudoers.d/admin1'

The user can then register their public key as follows:

cat ~/.ssh/id_rsa.pub | ssh admin1@nodeip 'mkdir -p .ssh && chmod 0700 .ssh && cat >> .ssh/authorized_keys'

This command assumes that your SSH public key is in ~/.ssh/id_rsa.pub. Replace the username admin1 and nodeip according to your setup.

To remove the user, run sudo deluser admin1.
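You can wrap the user-creation commands in a small helper so that the same steps are applied to each administrator. This is a hypothetical sketch: `add_ntk_admin` and `run` are not part of the appliance, and the conservative username check is an assumption. With DRY_RUN=1 the helper only prints the commands, so you can review them first.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create an admin user with docker access; pass "sudo" as $2 to also grant
# root-level access, as in the commands above.
add_ntk_admin() {
  user=$1
  case "$user" in
    ''|*[!a-z0-9_-]*) echo "invalid username: $user" >&2; return 1 ;;
  esac
  run sudo adduser -s /bin/bash "$user"
  run sudo addgroup "$user" docker
  if [ "$2" = "sudo" ]; then
    run sudo addgroup "$user" sudo
    run sudo sh -c "echo '$user ALL=(ALL) ALL' > /etc/sudoers.d/$user && chmod 0440 /etc/sudoers.d/$user"
  fi
}
```

For example, `DRY_RUN=1 add_ntk_admin admin1 sudo` prints the four commands without changing the system.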

Assembling multi node cluster

Any licensing model allows a cluster of two or more nodes. Cluster nodes can be located in a single virtual datacenter or spread across multiple datacenters, provided there is a reliable communications link between them. If an L2 network cannot be provided between datacenters, an advanced configuration can deploy a private routed network between the nodes.

Configuration of first node

- Start with basic configuration wizard.

[ntkadmin@appserver-1/mydomain]$ ntk wizard


Welcome to the Notakey Authentication Appliance

configuration wizard.

This wizard is meant to guide you through the initial

setup.

Advanced configuration is always available via the 'ntk'

command. Consult the user manual for more details.

==> Detecting network interfaces

==> Gathering information

NOTE: Datacenter / domain must be the same if assembling

local multi node cluster. Local cluster resides in

single LAN segment with unrestricted communication.

This value must contain only alphanumeric characters

and - with no other punctuation or spaces.

Enter the node hostname: appserver-1

NOTE: Datacenter / domain must be the same if assembling

local multi node cluster. Local cluster resides in

single LAN segment with unrestricted communication.

This value must contain only alphanumeric characters

and - with no other punctuation or spaces.

Enter the cluster datacenter name/local domain: mydomain

NOTE: You can specify below to join automatically an existing

cluster. Specifying y will start cluster locally and

attempt to join existing cluster by connecting to

specified IP address. Cluster service must be started on

remote host and must be in healthy state.

If unsure, say n, you will have a chance to do this later.

Are you joining existing cluster? (y/n) [n]: n

Enter the desired domain name (e.g. ntk.example.com): ntk.example.com

Detected interfaces: eth0

Enter interface you would like to configure: eth0

Use DHCP? (y/n): n

NOTE: This wizard does not support IPv6 IP settings

Consult the appliance user manual for more details on

using IPv6.

Enter the static IPv4 in CIDR notation (e.g. 10.0.1.2/24): 192.168.1.2/24

Enter the static IPv4 gateway (e.g. 10.0.1.1): 192.168.1.1

Enter the DNS server addresses (separate values with ,): 192.168.1.1

Use automated TLS (Lets Encrypt?) (y/n): y

Enter e-mail address to use for requesting TLS certificate: helpdesk@example.com

==> Please review the entered information

Network interface: eth0

Use DHCP: n

Static IPv4: 192.168.1.2/24

Static gateway: 192.168.1.1

DNS servers: 192.168.1.1

External domain: ntk.example.com

Use Lets Encrypt (automatic TLS): y

E-mail for Lets Encrypt: helpdesk@example.com

Are these values OK? (y/n): y

==> Applying configuration

acme... ok

network settings... ok

basic settings... ok

- If required, configure management to run on a separate port. The application accelerator (caching) has to be disabled when a management port is used.

[ntkadmin@appserver-1/mydomain]$ ntk cfg setc nas.mgmt_port 8443

[ntkadmin@appserver-1/mydomain]$ ntk cfg setc :nas.caching 0

- Apply the settings by restarting the appliance.

[ntkadmin@appserver-1/mydomain]$ ntk sys reboot

- Start the application and datastore in single-node mode.

[ntkadmin@appserver-1/mydomain]$ ntk as start

==> Validating Notakey Authentication Server configuration

Checking security settings

Checking virtual network configuration

required: traefik-net

network exists

Checking software images

required: notakey/dashboard:2.5.0

Checking host

==> Validating Notakey Authentication Server cluster configuration

Checking node name

Checking cluster size

Checking if this node is cluster root... yes (root)

Checking advertise IP

Checking data center name

Checking TLS

using Lets Encrypt certificates

IMPORTANT: make sure this instance is publicly accessible via ntk.example.com

=> Starting NAS data store

client address: 172.17.0.1

cluster advertise address: 192.168.1.2

cluster size (minimum): 1

is cluster root: 1

started bebf41523ad60c6a222ec5b34be7bb64fd94751a95392d188c9eaa38320fc732

=> Starting new NAS instance

net: traefik-net

image: notakey/dashboard:2.5.0

cluster.image: consul:0.7.5

host: naa.4.0.0.notakey.com

cluster backend: http://172.17.0.1:8500/main-dashboard

loglevel: info

env: production

started 4de38ddc53a013445ab2b2c3c4c33537af26e4e0e44f935c2bd1e000bec6df04

=> Starting the reverse proxy

ACME status: on

ACME e-mail: admin@mycompany.com

started 49fcfdada52f3d5495ae1e03b517c90578ed3dd768ee154cdf72357c898d5f7b

Subsequent node configuration

- As on first node, start with basic configuration wizard.

[ntkadmin@appserver-2/mydomain]$ ntk wizard


Welcome to the Notakey Authentication Appliance

configuration wizard.

This wizard is meant to guide you through the initial

setup.

Advanced configuration is always available via the 'ntk'

command. Consult the user manual for more details.

==> Detecting network interfaces

==> Gathering information

NOTE: Node hostname must be unique within cluster and

must contain only alphanumeric characters and -

with no other punctuation or spaces.

Enter the node hostname []: appserver-2

NOTE: Datacenter / domain must be the same if assembling

local multi node cluster. Local cluster resides in

single LAN segment with unrestricted communication.

This value must contain only alphanumeric characters

and - with no other punctuation or spaces.

Enter the cluster datacenter name/local domain []: mydomain

NOTE: You can specify below to join automatically an existing

cluster. Specifying y will start cluster locally and

attempt to join existing cluster by connecting to

specified IP address. Cluster service must be started on

remote host and must be in healthy state.

If unsure, say n, you will have a chance to do this later.

Are you joining existing cluster? (y/n) [y]:

Please provide numeric IP adress for existing cluster member: 192.168.1.2

Detected interfaces: eth0

Enter interface you would like to configure: eth0

Use DHCP? (y/n): n

Enter the static IPv4 in CIDR notation (e.g. 10.0.1.2/24): 192.168.1.3/24

Enter the static IPv4 gateway (e.g. 10.0.1.1): 192.168.1.1

Enter the DNS server addresses (separate values with ,): 192.168.1.1

Use automated TLS (Lets Encrypt?) (y/n): y

Enter e-mail address to use for requesting TLS certificate: helpdesk@example.com

==> Please review the entered information

Network interface: eth0

Use DHCP: n

Static IPv4: 192.168.1.3/24

Static gateway: 192.168.1.1

DNS servers: 192.168.1.1

Joining existing cluster: 192.168.1.2

Are these values OK? (y/n): y

==> Applying configuration

acme... ok

network settings... ok

basic settings... ok

joining cluster... ok

- If required, set the management port individually on each node. The application accelerator (caching) has to be disabled when a management port is used.

[ntkadmin@appserver-2/mydomain]$ ntk cfg setc nas.mgmt_port 8443

[ntkadmin@appserver-2/mydomain]$ ntk cfg setc :nas.caching 0

- Apply the settings by restarting the appliance.

[ntkadmin@appserver-2/mydomain]$ ntk sys reboot

- Verify that the nodes have successfully synced.

[ntkadmin@appserver-2/mydomain]$ ntk cluster nodes

Node Status IP Address Data Center

appserver-1 alive 192.168.1.2 mydomain

appserver-2 alive 192.168.1.3 mydomain

- Start the application on the node.

[ntkadmin@appserver-2/mydomain]$ ntk as start

...
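After joining nodes, you can check the ntk cluster nodes output against the quorum rule from the Availability considerations section. This sketch assumes the output is plain text in the column layout shown above, with a header line first and the status in the second column. It also assumes that any status other than alive means the node is unavailable. `check_cluster_quorum` is a hypothetical name.

```shell
#!/bin/sh
# Read `ntk cluster nodes` output on stdin and report whether a quorum
# (floor(N / 2) + 1) of the listed nodes is alive.
# Exit status 0 means the quorum is met.
check_cluster_quorum() {
  awk '
    NR == 1 { next }          # skip the header line
    NF >= 2 {
      total++
      if ($2 == "alive") alive++
    }
    END {
      quorum = int(total / 2) + 1
      printf "alive %d of %d, quorum %d\n", alive, total, quorum
      if (total > 0 && alive >= quorum) exit 0
      exit 1
    }'
}
```

For example, `ntk cluster nodes | check_cluster_quorum && echo "cluster has quorum"`. If ntk adds color codes when its output is piped, strip them before parsing.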

Further steps

To get the service fully up and running, the following steps are required:

- VRRP configuration, if the built-in VRRP is used. Consult the Virtual Router Redundancy Protocol (VRRP) section.

- Authentication server configuration: upload the licence and configure the required services, onboarding requirements and user sources. A manual is available after logging on to the administration dashboard.

- Notakey Authentication Proxy configuration. Consult the Authentication Proxy section.

Appliance Upgrade

Subsections below describe various upgrade scenarios.

RancherOS upgrade

Run the following commands as the rancher user. The limited user that can be created during installation does not have the privileges needed to upgrade or downgrade the OS.

From: https://rancher.com/docs/os/v1.2/en/upgrading/

When a new RancherOS version is released, you can upgrade the OS with the ros os command.

Since RancherOS is a kernel and initrd, the upgrade process is downloading a new kernel and initrd, and updating the boot loader to point to it. The old kernel and initrd are not removed. If there is a problem with your upgrade, you can select the old kernel from the Syslinux bootloader.

RancherOS version control

Use the command below to get your current RancherOS version.

$ sudo ros os version

v1.5.6

RancherOS available releases

Use the command below to list the available RancherOS versions.

$ sudo ros os list

rancher/os:v1.5.6 local latest

rancher/os:v1.5.5 local available running

rancher/os:v1.5.4 remote available

rancher/os:v1.5.3 remote available

rancher/os:v1.5.2 remote available

RancherOS upgrade to the latest release

Use the command below to upgrade RancherOS to the latest version.

$ sudo ros os upgrade

Upgrading to rancher/os:v1.5.6

Continue [y/N]: y

...

...

...

Continue with reboot [y/N]: y

INFO[0037] Rebooting
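Before upgrading, you can inspect the ros os list output to see whether the running image differs from the one tagged latest. This sketch assumes the column layout shown above, where running and latest appear as separate words after the image name. `ros_upgrade_available` is a hypothetical name.

```shell
#!/bin/sh
# Read `ros os list` output on stdin, print the running and latest images,
# and exit with status 0 if the latest image is not the running one.
ros_upgrade_available() {
  awk '
    {
      for (i = 2; i <= NF; i++) {
        if ($i == "running") running = $1
        if ($i == "latest") latest = $1
      }
    }
    END {
      printf "running=%s latest=%s\n", running, latest
      if (latest != "" && running != latest) exit 0
      exit 1
    }'
}
```

For example, `sudo ros os list | ros_upgrade_available && sudo ros os upgrade`.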

RancherOS upgrade/downgrade to a specific version

If you have upgraded RancherOS and something no longer works, you can roll back by specifying the previous version with the -i option. If a new RancherOS version is available but not yet listed, you can upgrade to it by specifying it with the -i option as well.

$ sudo ros os upgrade -i rancher/os:v1.5.7

Upgrading to rancher/os:v1.5.7

Continue [y/N]: y

...

...

...

Continue with reboot [y/N]: y

INFO[0082] Rebooting

RancherOS user docker version change

The user Docker service runs all NtkAA services. Whenever you run the docker command directly from the CLI, you are interacting with this service. The RancherOS vendor recommends against changing the Docker version without an OS upgrade, so perform this operation at your own discretion and risk.

Use the command sudo ros engine list -u to discover available Docker versions.

Use the command sudo ros engine switch docker-19.03.11 to switch to a specific version.

4.2.x with single node.

Below are the steps required to migrate a previous-generation appliance to the current version.

Save a backup with:

ntk backup save /home/ntkadmin/migrate.backup

Copy the backup to your PC with:

scp ntkadmin@myip:/home/ntkadmin/migrate.backup ~/

Halt the 4.2 version appliance with:

ntk sys shutdown

Install the new appliance as described in the Appliance Installation section.

Configure the network identically to the previous version.

Run the wizard during installation and configure the node name and datacenter as specified in the 4.2 appliance.

Copy the backup file to the new appliance:

scp ~/migrate.backup rancher@myip:~/

Load the backup with:

ntk backup load /home/rancher/migrate.backup

Start the required services manually (e.g. ntk as start).

4.2.x multinode

The steps below describe how to remove a single previous-version node from the cluster and join a new-version appliance in its place.

A mixed-version configuration, where some nodes in the cluster run an older version, is generally not an issue, provided that Docker images are regularly updated.

Update the node you plan to replace to the latest versions with:

ntk sys update

On the node you plan to replace, save a backup with:

ntk backup save /home/ntkadmin/migrate.backup

Copy the backup to your PC with:

scp ntkadmin@myip:/home/ntkadmin/migrate.backup ~/

Halt the 4.2 version appliance with:

ntk sys shutdown

On another node in the cluster, verify that cluster availability has not changed with:

ntk cluster health

Install the new appliance as described in the Appliance Installation section.

Configure the network identically to the previous version.

Run the wizard during installation and configure a different (unique) node name and an identical datacenter.

Join the node to the cluster during the wizard setup.

Copy the backup file to the new appliance:

scp ~/migrate.backup rancher@myip:~/

Load the backup with the following command, which restores only the local, non-network configuration:

ntk backup load /home/rancher/migrate.backup --skip-net --skip-cluster

Start the required services manually (e.g. ntk as start).

Verify that the services have started successfully (ntk <service> status).

Configuration Management

The ntk cfg commands provide a unified interface for configuration management.

Command reference

ntk cfg getc

Usage:

ntk cfg getc [<cfg_key>]

ntk cfg getc [<cfg_key>] --batch

ntk cfg getc :

Description:

Retrieve a configuration key. Configuration keys can be hierarchical, meaning that keys can contain subkeys. Enter a parent key to get an overview of its child keys and values.

Keys with a colon (:) prefix are stored in the cluster, so the cluster has to be started and readable to query them. Use two colons (::) to retrieve a value from the cluster and, if it is not present there, fall back to the locally stored value.

The --batch parameter allows piping output to a file without color formatting. Specifying : as the key (ntk cfg getc :) lists all cluster configuration keys.
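The prefix rules can be summarized with a small model in which the local and cluster stores are plain key=value files. This only illustrates the lookup order. It is not how ntk stores or reads configuration, and `cfg_lookup` and `lookup_in` are hypothetical names.

```shell
#!/bin/sh
# Print the value of key $1 from the key=value file $2; fail if it is absent.
lookup_in() {
  awk -F= -v k="$1" '
    $1 == k { sub(/^[^=]*=/, ""); print; found = 1; exit }
    END { if (!found) exit 1 }' "$2"
}

# Model of the ntk cfg getc prefix rules:
#   key    -> local store only
#   :key   -> cluster store only
#   ::key  -> cluster store, falling back to the local store
#   $1 key   $2 local store file   $3 cluster store file
cfg_lookup() {
  key=$1 local_store=$2 cluster_store=$3
  case "$key" in
    ::*) lookup_in "${key#::}" "$cluster_store" || lookup_in "${key#::}" "$local_store" ;;
    :*)  lookup_in "${key#:}" "$cluster_store" ;;
    *)   lookup_in "$key" "$local_store" ;;
  esac
}
```

In this model, a value stored in the cluster always wins for a :: lookup, which matches the fallback behavior described above.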

ntk cfg setc

Usage:

ntk cfg setc <cfg_key> <value>

ntk cfg setc <cfg_key> <file> --load-file

ntk cfg setc <cfg_key> <value_struct> --json-input

Description:

Set configuration key to specified value. Configuration keys can be hierarchical, meaning that keys can contain subkeys.

Option --json-input interprets the value as JSON and can be used to populate the child keys of the specified key.

Configuration values can include standard emojis, for example in messages used for authentication requests. The appliance stores all configuration strings internally using UTF-16 encoding, so emoji codes must be written as UTF-16 character pairs (surrogate pairs) in a valid combination. No character escaping is necessary.

Here is an example of configuring the :sunglasses: emoji for NtkAp:

ntk cfg setc ap.message_description "Hello {0}, ready to do some off-site work \uD83D\uDE0E ?"
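To find the surrogate pair for an emoji, subtract 0x10000 from its code point. Then add the high 10 bits of the result to 0xD800 and the low 10 bits to 0xDC00. The hypothetical helper `utf16_escape` below performs this conversion and prints the \uXXXX form used in the example above. For U+1F60E (smiling face with sunglasses), it prints \uD83D\uDE0E.

```shell
#!/bin/sh
# Convert a Unicode code point (hex digits, without "U+") to \uXXXX escapes.
# Code points above U+FFFF are split into a UTF-16 surrogate pair.
utf16_escape() {
  cp=$(( 0x$1 ))
  if [ "$cp" -le $(( 0xFFFF )) ]; then
    # Basic Multilingual Plane: a single UTF-16 code unit
    printf '\\u%04X\n' "$cp"
  else
    v=$(( cp - 0x10000 ))
    hi=$(( 0xD800 + (v >> 10) ))     # high surrogate: top 10 bits
    lo=$(( 0xDC00 + (v & 0x3FF) ))   # low surrogate: bottom 10 bits
    printf '\\u%04X\\u%04X\n' "$hi" "$lo"
  fi
}
```

For example: `ntk cfg setc ap.message_description "Hello {0}, ready to do some off-site work $(utf16_escape 1F60E) ?"`.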

Partial vs Full Configuration Keys

You can retrieve a value by its full key (e.g. node.name), or retrieve part of the configuration tree by specifying a partial key (e.g. just node for all the values underneath it).

# Example illustrating that configuration values can be retrieved

# using both full and partial keys.

# Full key

$ ntk cfg getc node.name

NotakeyNode1

# Full key

$ ntk cfg getc node.datacenter

DefaultDC

# Partial key will retrieve all keys underneath it

$ ntk cfg getc node

{

"name": "NotakeyNode1",

"datacenter": "DefaultDC"

}

# Store key in cluster configuration

$ ntk cfg setc :sso.host sso.example.com

sso.example.com

# Get key from cluster configuration

$ ntk cfg getc :sso.host

sso.example.com

# Store key locally and retrieve with fallback to local value

# If there is a cluster stored value, it will override local value

$ ntk cfg delc :sso.host

$ ntk cfg setc sso.host mysso.example.com

mysso.example.com

$ ntk cfg getc ::sso.host

mysso.example.com

# Save boolean value in test.boolkey

$ ntk cfg setc test.boolkey true --json-input

true

# Check for saved value

$ ntk cfg getc test

{

"boolkey": true

}

# Save integer value in test.intkey

$ ntk cfg setc test.intkey 98467 --json-input

98467

# Check for saved value

$ ntk cfg getc test

{

"boolkey": true,

"intkey": 98467

}

Setting Nested Values (--json-input)

An important parameter to know is --json-input, which is sometimes necessary for setting values. It directs the configuration engine to interpret the configuration value as JSON. This allows setting multiple nested values at once.

By default, values will be interpreted as a string or a number. This is usually fine. However, when using a partial configuration key, it may override values nested below. For example:

# Example of how node.name and node.datacenter

# values can be overridden by accident.

$ ntk cfg setc node { "name": "NodeName", "datacenter": "NodeDC" }

{ name: NodeName, datacenter: NodeDC }

# Without --json-input, the value was

# interpreted as one long string

$ ntk cfg getc node

{ name: NodeName, datacenter: NodeDC }

# The nested value node.name does not exist

# anymore

$ ntk cfg getc node.name

Alternatively, if we use --json-input, then the values are set hierarchically:

# Note the use of single quotes around

# the value

$ ntk cfg setc node '{ "name": "NodeName", "datacenter": "NodeDC" }' --json-input

{

"name": "NodeName",

"datacenter": "NodeDC"

}

# Multi-line output hints that the value

# is not a simple string anymore

$ ntk cfg getc node

{

"name": "NodeName",

"datacenter": "NodeDC"

}

$ ntk cfg getc node.name

NodeName
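Single quotes work well until the JSON itself contains a single quote (an apostrophe). One way around this, sketched below with standard shell features only, is a quoted here-document: the JSON is written exactly as it should arrive, and the result is passed to ntk cfg setc as a single quoted argument. The key test.info and its contents are hypothetical examples.

```shell
# The quoted delimiter ('EOF') turns off $-expansion and backslash handling,
# so the JSON is kept verbatim, apostrophe included.
value=$(cat <<'EOF'
{ "owner": "Bob's team", "priority": 1 }
EOF
)
printf '%s\n' "$value"

# Then, on the appliance, pass it as one argument:
#   ntk cfg setc test.info "$value" --json-input
```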

Loading Values From a File (--load-file)

You can load values from a file by passing the --load-file argument to ntk cfg setc. The value argument will be interpreted as a path to a file, and the contents of this file will be used as the real configuration value.

ntk cfg setc <key> <path_to_file> --load-file

$ echo "Hello World" > /tmp/value_file

$ ntk cfg setc test_value /tmp/value_file --load-file

Hello World

$ ntk cfg getc test_value

Hello World

The parameter is useful when setting these configuration keys, which are cumbersome to set directly:

| Key | Description |
|---|---|
| :ssl.pem | HTTPS certificate in PEM format |
| :ssl.key | Certificate key in PEM format |

ntk cfg migrate

Usage:

ntk cfg migrate <cfg_key_src> <cfg_key_dst>

Description:

Retrieves a configuration key and stores its value in the destination key. Use :keyname for global, cluster-backed configuration keys and keyname without any prefix for local persistent configuration. If the source key is empty, the destination value is effectively deleted.
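For example, a value that was first stored locally on one node could be copied into the cluster so that all nodes share it. This sketch reuses the sso.host key from the examples above. Whether the local source key is kept after migration is not described here, so the last command simply checks it.

```shell
# Copy the local (node-only) value into the cluster-wide key
ntk cfg migrate sso.host :sso.host

# Verify the cluster value, then inspect what remains locally
ntk cfg getc :sso.host
ntk cfg getc sso.host
```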

Task Scheduler

Commands in ntk cron enable or disable running of maintenance jobs in subscribed services. This must be enabled on at least one node in the cluster and can be safely enabled on all nodes.

Services such as NAS and SSO subscribe to incoming cron events and run their maintenance jobs as needed on hourly, daily, weekly and monthly schedules.

Command reference

ntk cron enable

Usage:

ntk cron enable

Description:

Enables cron task processing globally on the node. A full node restart is required.

ntk cron disable

Usage:

ntk cron disable

Description:

Disables global cron task processing on node.

ntk cron run

Usage:

ntk cron run

Description:

Allows you to debug task processing. The output shows the tasks that will be run and their output.

Reverse Proxy

Commands in the ntk rp section manage Reverse Proxy configuration and status. Reverse Proxy handles all incoming HTTP(S) requests and routes them to the corresponding providers.

Configuration settings

The following configuration settings can be configured:

| Key | Description | Default |
|---|---|---|
| :ssl.acme.status | On/off flag. When on, TLS certificates will be created and auto-renewed using Let’s Encrypt. When off, TLS certificates must be set via the ssl.pem and ssl.key configuration values. | off |
| :ssl.acme.email | When using Let’s Encrypt TLS certificates, this will be sent as the contact address. | - |
| :ssl.acme.mode | When using Let’s Encrypt TLS certificates, you can use either the HTTP-01 (config value http) or the TLS-01 (config value tls) method. Default is http. | http |
| :ssl.pem | Default TLS certificate (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN CERTIFICATE----- and have newlines escaped as \n. This value usually requires the certificate chain, so there can be multiple certificates, starting from intermediate. | - |
| :ssl.key | Default TLS certificate key (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN PRIVATE KEY----- and have newlines escaped as \n. | - |
| :ssl.acme.mgmt_enable | On/off flag. Enables a management interface TLS certificate acquired from ACME. If ACME is used and this is not enabled, management will use a self-signed certificate. | off |
| :ssl.acme.ca | ACME CA URL. The default value is the production URL (https://acme-v02.api.letsencrypt.org/directory); use staging https://acme-staging-v02.api.letsencrypt.org/directory for tests. | Let’s Encrypt production URL |
| ssl.acme.debug | Set to "true" to enable ACME certificate provisioning debugging. This is a local setting. | - |
| :rp.custom_params | Key value pairs of custom configuration options, e.g. key1=value,key2=value. | - |
| :rp.extra_domains | Additional domains for local custom services. Comma-separated values, e.g. “myapp.example.com,other.example.com”. An HTTP TLS certificate will be requested from ACME for all domains listed here. | - |

Change settings using ntk cfg setc command.
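When :ssl.pem or :ssl.key is set directly rather than with --load-file, the PEM text has to be on one line with its newlines written as \n. A minimal sketch of that conversion with awk follows; pem_to_escaped is a hypothetical helper, and the file path in the usage comment is only an example.

```shell
# Hypothetical helper: print a PEM file as a single line, replacing each line
# break with the two characters "\n" and dropping Windows carriage returns.
pem_to_escaped() {
    awk '{ sub(/\r$/, ""); printf "%s\\n", $0 }' "$1"
}

# Usage on the appliance (example path):
#   ntk cfg setc :ssl.pem "$(pem_to_escaped /home/ntkadmin/server.pem)"
```

Using --load-file avoids this step entirely; the escaped form is only needed when pasting the value on the command line.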

Command reference

ntk rp restart

Usage:

ntk rp restart

Description:

Stops and starts Reverse Proxy. Use this command to apply configuration changes.

ntk rp start

Usage:

ntk rp start [--force]

Description:

Starts Reverse Proxy. Use the --force option to skip validating that the TLS certificate matches the configured DNS hostname, which is useful e.g. during initial setup.

ntk rp stop

Usage:

ntk rp stop

Description:

Stops Reverse Proxy. The service will be stopped and will not be started until ntk rp start is called. The stopped state persists across appliance restarts.

ntk rp resettls

Usage:

ntk rp resettls

Description:

Resets all ACME TLS settings and starts certificate acquisition and validation from scratch.

ntk rp status

Usage:

ntk rp status [--short]

Description:

Shows status of all Reverse Proxy components. Use --short to display only the status (useful for scripting).

ntk rp validate

Usage:

ntk rp validate

Description:

Validates Reverse Proxy configuration, checks TLS certificate validity, cluster and networking configuration.

Authentication Server

Commands in the ntk as section govern Notakey Authentication Server (NtkAS) state and configuration. Notakey Authentication Server consists of three distinct parts: the device API used for interacting with mobile clients, the management API used for managing services and sending authentication requests, and finally the browser-based management user interface. When the appliance is installed, all components are activated and available on the HTTPS port of the appliance.

Configuration settings

The following configuration settings must be present to start this service.

| Key | Description | Default |
|---|---|---|
| :nas.host | External FQDN for authentication API service. | - |
| nas.mgmt_port | Set port number used for management, see below for details. | 443 |
| nas.auto_usersync | Set to 1 to enable automatic user sync for all services from external user repository sources (e.g. LDAP and ActiveDirectory). | 0 |
| :nas.update_notification | Set to 0 to disable automatic update notifications for Notakey Authentication Server for all users in Administration Service. | 1 |
| :nas.caching | Set to 0 to disable caching (caching will not work correctly in load balanced environments). | 1 |
| :nas.cache_ttl | Set lifetime of cached entries in seconds. | 3600 |
| :nas.redis_url | URL for REDIS application accelerator distributed cache. Add multiple (sharded) servers separated by commas, e.g. redis://redis1:6379/0,redis://redis2:6379/0 | - |
| :messenger_url | Set URL used for push notification and SMS sending. Set to off to disable push notifications. | https://messenger.notakey.com |
| nas.healthcheck | Set to “off” to disable internal service consistency check. Use only if experiencing issues with the service becoming unhealthy due to unhandled spikes in load. | on |
| :nas.healthcheck_interval | Interval in seconds between health checks. | 10 |
| :nas.healthcheck_timeout | Timeout in seconds for health probe. | 10 |
| :nas.auth.ttl | API access token lifetime in seconds. When the token expires, a new one has to be requested. | 7200 |
| :nas.ks.pubkey_ttl | Lifetime in seconds for keys used for encryption. | 3600 |
| :nas.log.retention.auth_log | Days to store authentication log in cluster datastore. Use syslog audit endpoint for long term storage. | 30 |
| :nas.log.retention.onboarding_log | Days to store onboarding log in cluster datastore. Use syslog audit endpoint for long term storage. | 90 |
| :nas.log.retention.auth_request | Days to store processed, signed authentication requests. | 30 |
| :nas.phone_country_code | Phone number prefix to attempt to combine with the number provided by the user source to get a valid number in international format. If phone numbers are already in international format, this setting is ignored. | 371 |
| :nas.syslog | IP address or hostname to send technical logs to. For high volume nodes, an IP address is recommended. | - |
| :nas.syslog_level | Log level number to enable logging for. All events at this log level or above will be sent to syslog. The available log levels are debug, info, warn, error, fatal and unknown, corresponding to the log level numbers 0 to 5, respectively. | 3 |
| :auth_domain | Domain of authentication server (as configured using DNS SSD). If none is specified, a fallback value is used based on the main host (ntk cfg get :nas.host). | http://<host> |
| :ssl.nas.pem | TLS certificate (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN CERTIFICATE----- and have newlines escaped as \n. This value usually requires the certificate chain, so there can be multiple certificates, starting from intermediate. | - |
| :ssl.nas.key | TLS certificate key (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN PRIVATE KEY----- and have newlines escaped as \n. | - |
| :nas.custom_params | Key value pairs of custom configuration options, e.g. key1=value,key2=value. | - |
| :nas.secret_key_base | Management session cookie encryption key (generated automatically on first run). | - |
| :nas.external_proxy | Set to “on” to expose NtkAS directly to an external proxy / load balancer. Service will run on tcp/5000. | off |
| :nas.expose_mgmt_api | Set to “off” to disable access to the management API over the regular HTTPS port. | on |
| :nas.dual_instance_mode | Set to “on” to enable legacy dual container mode, where management runs in a separate container. If :nas.external_proxy is enabled, service will run on tcp/6000. | off |

Change settings using ntk cfg setc <Key> <value> command.
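:nas.syslog_level expects a number rather than a level name. A small lookup such as the hypothetical helper below, built from the level list in the table above, can avoid off-by-one mistakes when choosing the value.

```shell
# Hypothetical helper: map a log level name to the number used by
# :nas.syslog_level. Events at this level or above are sent to syslog.
syslog_level_number() {
    case "$1" in
        debug)   echo 0 ;;
        info)    echo 1 ;;
        warn)    echo 2 ;;
        error)   echo 3 ;;
        fatal)   echo 4 ;;
        unknown) echo 5 ;;
        *)       return 1 ;;   # not a recognized level name
    esac
}

# Usage on the appliance, e.g. to forward warnings and above:
#   ntk cfg setc :nas.syslog_level "$(syslog_level_number warn)"
#   ntk as restart
```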

Running management dashboard on non-default port

It is possible to run the management dashboard on a port other than the default 443, which is used for the device API. To set a different port, run e.g. ntk cfg setc nas.mgmt_port 8443, so that management data is separated from payload data. By default, all management APIs are also published on the public HTTPS endpoint. Run ntk cfg setc :nas.expose_mgmt_api off followed by an NtkAS restart to disable public management API access.

Remote management API consumers will have to use the specified management port for management operations (e.g. creating users, sending authentication requests).

The HTTPS certificate for management is set with ntk cfg setc :ssl.pem <cert> and ntk cfg setc :ssl.key <key>. ACME certificate configuration on a non-default port is not possible.
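Put together, the steps above could look like the following sequence. Port 8443 comes from the text; the certificate and key paths are hypothetical, and whether any other service besides NtkAS needs a restart to pick up the management certificate is not stated here.

```shell
# Serve management on tcp/8443 instead of the default 443 (local, per-node key)
ntk cfg setc nas.mgmt_port 8443

# Stop publishing the management API on the public HTTPS endpoint (cluster key)
ntk cfg setc :nas.expose_mgmt_api off

# Management HTTPS certificate and key, loaded from files (example paths)
ntk cfg setc :ssl.pem /home/ntkadmin/mgmt.pem --load-file 1> /dev/null
ntk cfg setc :ssl.key /home/ntkadmin/mgmt.key --load-file 1> /dev/null

# Apply the changes
ntk as restart
```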

Command reference

ntk as restart

Usage:

ntk as restart

Description:

Stops and starts Notakey Authentication Server. Use this command to apply configuration changes.

ntk as start

Usage:

ntk as start [--force]

Description:

Starts Notakey Authentication Server. Use the --force option to skip validating that the TLS certificate matches the configured DNS hostname, which is useful e.g. during initial setup.

ntk as stop

Usage:

ntk as stop

Description:

Stops Notakey Authentication Server. The service will be stopped and will not be started until ntk as start is called. The stopped state persists across appliance restarts.

ntk as status

Usage:

ntk as status [--short]

Description:

Shows status of all Notakey Authentication Server components. Use --short to display only the status (useful for scripting).

ntk as validate

Usage:

ntk as validate

Description:

Validates Notakey Authentication Server configuration, checks TLS certificate validity, cluster and networking configuration.

ntk as auth

Usage:

ntk as auth add <client name> [<client ID> <client secret>]

ntk as auth del <client ID>

ntk as auth scopes <client ID> "<scope1 scope2 scope3>"

ntk as auth ls

Description:

Manages M2M client credentials, which can be used to manage resources using APIs provided by Notakey Authentication Server.

See Notakey API integration documentation for details on how to establish an authentication session using these credentials.

Use ntk as auth add to add a new API consumer client. The client ID and client secret parameters are optional and will be generated automatically if not provided.

To define access permissions use ntk as auth scopes command. Scopes are defined per client and have to be requested for every session explicitly.

Refer to Notakey API integration documentation for details.

ntk as report

Usage:

ntk as report services

ntk as report users <service ID>

ntk as report devices <service ID>

Description:

Exports a tab-separated report of configured NAS services, users and devices. Use ntk as report services first to discover the “service ID” parameter for the users and devices commands.

ntk as bootstrap

Usage:

ntk as bootstrap

Description:

Provisions the authentication server with an initial root application, admin team, single admin user and license. Use this wizard if the web management UI is not directly accessible during installation, or when setting up the service to run behind an external load balancer.

[root@node/dc1]$ ntk as bootstrap

NOTE: This command provisions Notakey Authentication Server. All further

configuration must be done using web UI, which is running

either on standard https port or one specified in mgmt_port

config entry.

Please enter admin username: admin

Please enter admin password:

Retype new password:

Do you wish to disable 2FA for root service? (y/n): y

Provide full path to P12 licence file: /home/ntkadmin/license.p12

Proceed with recovery? (y/n): y

...

ntk as recoveradm

Usage:

ntk as recoveradm

Description:

Recovers admin user access to root administration service and enables onboarding for administration service. Any active existing administrators will receive notification of this change.

[root@node/dc1]$ ntk as recoveradm

NOTE: This command recovers admin user access to root administration

service by adding new admin user, enabling onboarding for

administration service and optionally changing 2FA requirement

for administrator access. Any active existing administrators will

receive notification about this change.

Please enter admin username: admin

Please enter admin password:

Retype new password:

Do you wish to disable 2FA for root service? (y/n): y

Proceed with recovery? (y/n): y

...

Single Sign-On Server

Notakey Single Sign-On Server (NtkSSO) is a web and mobile authentication solution based on the SimpleSAMLphp (SSP) project, supporting SAML, OAuth 2.0 and various other authentication protocols.

Commands in the ntk sso section govern NtkSSO state and configuration.

Configuration settings

| Key | Type | Description |
|---|---|---|
| :sso.host | string | FQDN that users will use to access the SSO service (e.g. sso.example.com). If ssl.acme.status is on, then this should be a publicly accessible address. |
| :sso.base."admin.protectindexpage" | bool | Do not allow direct access to the admin start page without authentication, true / false value. |
| :sso.base."admin.protectmetadata" | bool | Do not allow direct anonymous access to metadata used by service providers, true / false value. |
| :sso.base."auth.adminpassword" | string | Sets local admin password, allows access to metadata and diagnostics. |
| :sso.base.errorreporting | bool | Enables user error reporting form when problems occur, true / false value. |
| :sso.base.technicalcontact_name | string | Contact name for error reporting form. |
| :sso.base.technicalcontact_email | string | Email where to send error reports from error reporting form. |
| :sso.base.debug | bool | Enable debugging of authentication processes. |
| :sso.base.showerrors | bool | Enable or disable error display to end user (in form of backtrace). |
| :sso.base."logging.level" | integer | Sets log level, integer value from 0 to 7, 7 being most verbose, used with debug. |
| :sso.base."theme.use" | string | Sets custom theme, string value in format "module:theme". |
| :sso.base."session.duration" | integer | Maximum duration of authenticated session in seconds, default 8 hours. |
| :sso.base."session.cookie.name" | string | Option to override the default settings for the session cookie name. Default: 'NTK-SSO' |
| :sso.base."session.cookie.lifetime" | integer | Expiration time for the session cookie, in seconds. Defaults to 0, which means that the cookie expires when the browser is closed. Default: 0 |
| :sso.base."session.cookie.path" | string | Limit the path of the cookies. Can be used to limit the path of the cookies to a specific subdirectory. Default: '/' |
| :sso.base."session.cookie.domain" | string | Cookie domain. Can be used to make the session cookie available to several domains. Default: nil |
| :sso.base."session.cookie.secure" | bool | Set the secure flag in the cookie. Set this to TRUE if the user only accesses your service through https. If the user can access the service through both http and https, this must be set to FALSE. Default: FALSE |
| :sso.base."language.default" | string | Sets default ISO 639-1 language code for UI language. |
| :sso.base."trusted.url.domains" | array | Array of domains that are allowed when generating links or redirections to URLs. simpleSAMLphp will use this option to determine whether to consider a given URL valid or not, but you should always validate URLs obtained from the input on your own (i.e. ReturnTo or RelayState parameters obtained from the $_REQUEST array). Set to NULL to disable checking of URLs. simpleSAMLphp will automatically add your own domain (either by checking it dynamically, or by using the domain defined in the 'baseurlpath' directive, the latter having precedence) to the list of trusted domains, in case this option is NOT set to NULL. In that case, you are explicitly telling simpleSAMLphp to verify URLs. Set to an empty array to disallow ALL redirections or links pointing to an external URL other than your own domain. Example: ["sp.example.com", "app.example.com"]. Default: NULL |
| :sso.base."authproc.idp" | hash | Objects containing applied filters during authentication process, see below for details. |
| :sso.auth | hash | Contains objects that describe access to user repositories. At least one user repository must be configured. Please see below for details. |
| :sso."saml-sp" | hash | Objects describing all service provider (SP) metadata - these are web applications that consume the identity assertions. Please see below for details. |
| :sso."saml-idp" | hash | Objects describing names for this IdP. Only one IdP must be defined. Please see below for details. |
| :sso.certificates | hash | Objects describing all certificates used by SSO e.g. used for SAML assertion signing. Please see below for details. |
| :sso.modules | array | List of enabled modules. Only required modules should be enabled as this affects performance. Please see below for details. |
| sso.healthcheck | string | Set to off to disable internal healthcheck. To be used with caution in cases when using external load balancer. |
| :sso.healthcheck_interval | integer | Interval in seconds between health checks. Default: 10 |
| :sso.healthcheck_timeout | integer | Timeout in seconds for health probe. Default: 10 |
| :sso.cron | hash | Automatic task configuration. |
| :sso.cron.mode | string | Set to "http" to enable legacy cron mode, useful for SSO versions prior to 3.0.0. |
| :ssl.sso.pem | string | TLS certificate (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN CERTIFICATE----- and have newlines escaped as \n. This value usually requires the certificate chain, so there can be multiple certificates, starting from intermediate. |
| :ssl.sso.key | string | TLS certificate key (in PEM format). This is used only when ssl.acme.status is not on. You can set this value directly. It should begin with -----BEGIN PRIVATE KEY----- and have newlines escaped as \n. |
| :sso.custom_params | string | Key value pairs of custom configuration options (cluster value with fallback to local node value), e.g. key1=value,key2=value. |
| :sso.external_proxy | string | Set to "on" to expose NtkSSO directly to an external proxy / load balancer. Application will be reachable on tcp/7000. |

Most configuration entries support storage in the cluster, so prefix entries with : to share configuration between nodes. The exception is sso.image, which defines the version of the SSO image; if you install a custom version, you need to configure this on each node individually.

Change settings using the ntk cfg setc command. Use ntk cfg setc :sso.<key> <value> --json-input for boolean, integer and JSON structure values (hash and array), and ntk cfg setc :sso.<key> "<value>" for string values. See the examples below for implementation details on setting structure values.
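Keys whose last segment itself contains a dot, such as "auth.adminpassword", must reach ntk with literal double quotes around that segment. The examples in this section use two shell quoting styles for this; the plain POSIX shell snippet below (no ntk call) shows that both produce the identical argument.

```shell
# Style 1: backslash-escaped double quotes in an unquoted word
key_a=:sso.base.\"auth.adminpassword\"

# Style 2: double quotes inside a single-quoted string
key_b=':sso.base."auth.adminpassword"'

# Both variables hold the same text: :sso.base."auth.adminpassword"
printf '%s\n' "$key_a" "$key_b"
```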

Hashed passwords

For production use, enter any local passwords as hashes to improve security. To generate password hashes, use the following command in the NtkAA CLI terminal:

docker exec -it sso /var/simplesamlphp/bin/pwgen.php

Enter password: mystrongpassword

$2y$10$kQ/zxPQyfkb/bDPoKJ2es.7CRf5FGb2N5mwethSaKM.u5SWz62bFq
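Because the hash is copied into configuration by hand, a quick format check can catch copy errors. The sketch below assumes pwgen.php emits standard 60-character bcrypt hashes like the one above ($2y$, a two-digit cost, $, then 53 characters); the helper name is_bcrypt_hash is hypothetical. It also shows why the hash must be single-quoted in the shell.

```shell
# Hypothetical helper: return 0 if $1 looks like a bcrypt hash
# ($2a$, $2b$ or $2y$, two-digit cost, "$", 60 characters in total).
is_bcrypt_hash() {
    case "$1" in
        '$2'[aby]'$'[0-9][0-9]'$'*) [ "${#1}" -eq 60 ] ;;
        *) return 1 ;;
    esac
}

# Single quotes keep every "$" literal; inside double quotes the shell would
# try to expand the $-sequences (for example $2) and corrupt the hash.
hash='$2y$10$kQ/zxPQyfkb/bDPoKJ2es.7CRf5FGb2N5mwethSaKM.u5SWz62bFq'
is_bcrypt_hash "$hash" && echo "hash looks valid"
```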

Administration access

To access the various administration helper tools and inspect configured service providers, you need to access the management interface.

This can be done by accessing url https://

To configure access to the management interface, define the :sso.auth.admin authentication source.

Authentication with static password:

# define auth source

ntk cfg set :sso.auth.admin '{"module": "core:AdminPassword"}' --json-input

# generate password hash

docker exec -it sso /var/simplesamlphp/bin/pwgen.php

# enter generated hash into configuration

ntk cfg set :sso.base.\"auth.adminpassword\" '$2y$10$kQ/zxPQyfkb/bDPoKJ2es.7CRf5FGb2N5mwethSaKM.u5SWz62bFq'

# restart sso service

ntk sso restart

Authenticate with Notakey Authentication server module:

# define auth source (profile_id is optional and may be omitted)

ntk cfg set :sso.auth.admin '{

"module": "notakey:Process",

"endpoints": [

{

"name": "SSO Admin Login",

"url": "https://ntk-api.host/",

"service_id": "bc...00",

"client_id": "...",

"client_secret": "c7...53",

"profile_id": "b3...29",

"service_logo": "https://ntk-api.host/_cimg/b5..04?size=300x150"

}

]

}' --json-input

# restart sso service

ntk sso restart

See SSP documentation on authentication source configuration.

Userlogos directory

Any static file assets like images, certificates and custom CSS files can be stored in the userlogos directory. It is usually located in /home/ntkadmin/userlogos/, but the location can be changed by editing the /opt/notakey/cfg/.env file. Contents of this folder are synced automatically to other nodes, potentially overwriting older contents. See “ntk sso sync” for more details.

Basic service configuration

Basic settings

$ ntk cfg setc :sso.host sso.example.com

sso.example.com

$ ntk cfg setc :sso.base.technicalcontact_name "Example.com support"

Example.com support

$ ntk cfg setc :sso.base.technicalcontact_email support@example.com

support@example.com

$ ntk cfg setc ':sso.base."auth.adminpassword"' "myadminpass"

myadminpass

Configuring user repository access

NAS user repository

$ ntk cfg setc :sso.auth.ntkauth '{ "module": "notakey:Process",

"endpoints": [

{

"name": "SSO service",

"url": "https://demo.notakey.com/",

"service_id": "bc...00",

"client_id": "a0...162",

"client_secret": "secret",

"profile_id": "b3d...529",

"service_logo": "https://demo.notakey.com/_cimg/b5d3d0f7-e430-4a30-bd34-cea6bab9c904?size=300x150"

}

]}' --json-input

Description:

Configure a user repository from Notakey Authentication Server.

Description of parameters:

| Key | Type | Mandatory | Description |
|---|---|---|---|
| endpoints.?.name | string | yes | Name can be any string and will be shown on the authentication request in the title field. |
| endpoints.?.url | string | yes | Base URL of the Notakey Authentication Server (NtkAS) instance, e.g. https://demo.notakey.com/. |
| endpoints.?.service_id | string | yes | Service ID of NtkAS. This value is issued after service creation and can be found on the service page in the NtkAA admin dashboard as “Access ID”. |
| endpoints.?.client_id | string | yes | NtkAS management API OAuth client ID. |
| endpoints.?.client_secret | string | yes | NtkAS management API OAuth client secret. |
| endpoints.?.profile_id | string | no | NtkAS authentication profile ID to use for this endpoint. |
| endpoints.?.service_logo | string | no | Relative or full URL to the logo of this service. Use the ~/userlogos directory to store local images. |
| endpoints.?.stepup-source | string | no | Authentication source (from :sso.auth.xyz, where xyz is the authentication source name) to use for stepup authentication after 2FA verification. Usually some form of password verification. |
| endpoints.?.stepup-duration | string | no | ISO 8601 formatted duration of how long the stepup authentication is valid, for example P30D for 30 days. |
| user_id.attr | string | no | User attribute in filter mode to use for authentication. This attribute value will be used as the NtkAS service username that will receive the authentication request. |

LDAP based user repository

$ ntk cfg setc :sso.auth.myauth '{ "module": "ldap:LDAP",

"hostname": "ldaps://192.168.1.2",

"enable_tls": true,

"attributes": [

"sAMAccountName",

"cn",

"sn",

"mail",

"givenName",

"telephoneNumber",

"objectGUID"

],

"timeout": 10,

"referrals": false,

"dnpattern": "sAMAccountName=%username%,OU=MyOU,DC=example,DC=com"}' --json-input

Description:

Configure a user repository from LDAP, in this example from Microsoft Active Directory. Username matching is done by sAMAccountName attribute, but this could be configured as any other unique attribute. “search.attributes” defines which attributes are used for user ID matching.

SSO HTTP Certificate Configuration

To configure static TLS certificates for SSO HTTPS follow these steps:

- Use scp or a similar utility to transfer the certificate and key files to the appliance.

- Use ntk cfg setc :ssl.sso.pem and ntk cfg setc :ssl.sso.key to load the data from the files into the appliance configuration, as shown below:

ntk cfg setc :ssl.sso.pem /home/ntkadmin/ssossl.pem --load-file 1> /dev/null

ntk cfg setc :ssl.sso.key /home/ntkadmin/ssossl.key --load-file 1> /dev/null

- Ensure that ACME certificates are disabled (ntk cfg getc :ssl.acme.status returns “off”).
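Before loading the files, it can be worth checking that the certificate and key belong together. The sketch below uses the openssl command-line tool and compares the public key embedded in the certificate with the one derived from the private key. It assumes openssl is available where you run it (for example on the workstation before copying; this manual does not state that the appliance ships it) and that the server certificate is the first certificate in the PEM file. The helper name cert_matches_key is hypothetical.

```shell
# Hypothetical helper: exit status 0 when the first certificate in $1 and the
# private key in $2 contain the same public key, non-zero otherwise.
cert_matches_key() {
    cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 2
    key_pub=$(openssl pkey -in "$2" -pubout) || return 2
    [ "$cert_pub" = "$key_pub" ]
}

# Example:
#   cert_matches_key ssossl.pem ssossl.key && echo "certificate and key match"
```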

Configuring Identity Provider

Typical ID provider configuration

$ ntk cfg setc :sso.\"saml-idp\".\"sso.example.com\" '{

"host": "__DEFAULT__",

"privatekey": "server.key",

"certificate": "server.pem",

"auth": "myauth"

}' --json-input

Description:

Configures an IdP with the defined certificate and the selected user repository. If multi-tenant mode is used, multiple IdPs can be configured, and the IdP is selected by matching the HTTP Host header to the “host” configuration parameter. The fallback value is __DEFAULT__, as in the configuration above. The key and certificate have to be set with the commands ntk cfg setc sso.certificates.server.key and ntk cfg setc sso.certificates.server.pem, respectively. The certificate name can be any valid filename.

Configuring service providers

Generic Google Gapps configuration

$ ntk cfg setc :sso.\"saml-sp\".\"google.com\" '{

"AssertionConsumerService": "https://www.google.com/a/example.com/acs",

"NameIDFormat": "urn:oasis:names:tc:SAML:1.1:nameid-format:emailAddress",

"simplesaml.attributes": false,

"startpage.logo": "gapps.png",

"startpage.link": "http://mail.google.com/a/example.com",

"name": "Google Gapps"

}' --json-input

Description:

Google Gapps for business customers can be configured to authenticate to remote SAML IdP. Here is the server side configuration for this setup.

Use ntk cfg delc :sso.\"saml-sp\".\"google.com\" to delete the configured SP.

Amazon AWS console configuration

$ ntk cfg setc :sso.\"saml-sp\".\"urn:amazon:webservices\" '{

"entityid": "urn:amazon:webservices",

"OrganizationName": { "en": "Amazon Web Services, Inc." },

"name": { "en": "AWS Management Console" },

"startpage.logo": "aws-logo.png",

"startpage.link": "https://sso.example.com/sso/saml2/idp/SSOService.php?spentityid=urn:amazon:webservices",

"OrganizationDisplayName": { "en": "AWS" },

"url": { "en": "https://aws.amazon.com" },

"OrganizationURL": { "en": "https://aws.amazon.com" },

"metadata-set": "saml20-sp-remote",

"AssertionConsumerService": [ {

"Binding": "urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST",

"Location": "https://signin.aws.amazon.com/saml",

"index": 1,

"isDefault": true

} ],

"SingleLogoutService": "",

"NameIDFormat": "urn:oasis:names:tc:SAML:2.0:nameid-format:transient",

"attributes.required": [ "https://aws.amazon.com/SAML/Attributes/Role", "https://aws.amazon.com/SAML/Attributes/RoleSessionName" ],

"keys": [ {

"encryption": false,

"signing": true,

"type": "X509Certificate",

"X509Certificate": "MIIDbTCCAlWgAwIBAgIEKJJQLzANBgkqhkiG9w0BAQsFADBnMR8wHQYDVQQDExZ1\ncm46YW1hem9uOndlYnNlcnZpY2VzMSIwIAYDVQQKExlBbWF6b24gV2ViIFNlcnZp\nY2VzLCBJbmMuMRMwEQYDVQQIEwpXYXNoaW5ndG9uMQswCQYDVQQGEwJVUzAeFw0x\nNzA2MDYwMDAwMDBaFw0xODA2MDYwMDAwMDBaMGcxHzAdBgNVBAMTFnVybjphbWF6\nb246d2Vic2VydmljZXMxIjAgBgNVBAoTGUFtYXpvbiBXZWIgU2VydmljZXMsIElu\nYy4xEzARBgNVBAgTCldhc2hpbmd0b24xCzAJBgNVBAYTAlVTMIIBIjANBgkqhkiG\n9w0BAQEFAAOCAQ8AMIIBCgKCAQEAnmVupB5TWNR4Jfr8xU0aTXkGZrIptctNUuUU\nfA5wt7lEAgoH7JeIsw7I3/QYwfA0BkGcUxLiW4sj7vqtdXwLK5yHHOg3NYsN2wCU\noSnsw/msH7nyeyUXXRr9f+dyJRRViBnlaJ3RHNk6UZEbS/DJG/W4OMlthdzIXJbA\n0G0xQid96lUZOgeLuz1olWiAIbJRPqeBRcVlY8MvHzVE82ufvSgkGnMZAt6B4Q6T\n9PsIDdhRDbTBTtUAmaKF3NXl/IPq5eybM6Pw3gcVlsrtgq0LHsdhgRdMz6UeBaA9\nTn/dgmeWM+UKEXkHbtnsq6q9X+8Nq/HwGylI/p3cPZ8WuOjXeQIDAQABoyEwHzAd\nBgNVHQ4EFgQU7F4XurLqS7Bsxj8NKcA9BvHkEE0wDQYJKoZIhvcNAQELBQADggEB\nAJItHBnpD62VaOTSPqIzVabkpXWkmnclnkENAdndjvMcLJ3qrzqKb3xMQiEGCVzF\ni9ZXU3ucMAwufIoBaSjcuFNYT1Wuqn+Jed2xH5rUOAjeDndB7IjziU5kyW+6Zw9C\nFmSN8t0FFW2f3IALgWfrrJ6VWPzen9SJrNPmVralau66pDaZbGgpBkVi2rSfJiyM\nleau9hDNBhV+oPuvwDlbceXOdmJKUTkjyID9r+CmtHq7NL6omIH6xQw8DNFfa2vY\n17w2WzOcd50R6UUfF3PwGgEyjdiigWYOdaSpe0SWFkFLjtcEGUfFzeh03sLwOyLw\nWcxennNbKTXbm1Qu2zVgsks="

} ],
"saml20.sign.assertion": true,
"authproc": {
"91": {
"class": "core:AttributeAdd",
"https://aws.amazon.com/SAML/Attributes/Role": [ "arn:aws:iam::540274555001:role/SamlRoleAdmin,arn:aws:iam::540274555001:saml-provider/NTKSSO" ]
},
"92": {
"class": "core:PHP",
"code": "$attributes[\"https://aws.amazon.com/SAML/Attributes/RoleSessionName\"][0] = $attributes[\"uid\"][0];"
}
}
}' --json-input

Description:

The example above shows a more complex scenario in which the SP requires specific attributes to be present in the assertion. The role SamlRoleAdmin therefore has to be created in the AWS IAM module configuration. Here RoleSessionName is simply copied from the user ID value; a production environment would require a more elaborate setup.
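To preview what AWS receives, the effect of filters 91 and 92 can be approximated in a short Python sketch. The helper `aws_saml_attributes` and the sample user `jdoe` are hypothetical; the ARNs are copied from the configuration above. The sketch models only the resulting attribute values, not how NtkSSO actually executes authproc filters.

```python
ROLE_ATTR = "https://aws.amazon.com/SAML/Attributes/Role"
SESSION_ATTR = "https://aws.amazon.com/SAML/Attributes/RoleSessionName"


def aws_saml_attributes(attributes, role_arn, provider_arn):
    """Return a copy of `attributes` with the values filters 91 and 92 would add (hypothetical helper)."""
    result = {name: list(values) for name, values in attributes.items()}
    # Filter 91 (core:AttributeAdd): a single value "<role ARN>,<SAML provider ARN>".
    result.setdefault(ROLE_ATTR, []).append(f"{role_arn},{provider_arn}")
    # Filter 92 (core:PHP): RoleSessionName[0] is copied from the first uid value.
    session = result.setdefault(SESSION_ATTR, [])
    if session:
        session[0] = attributes["uid"][0]
    else:
        session.append(attributes["uid"][0])
    return result


attrs = aws_saml_attributes(
    {"uid": ["jdoe"]},  # hypothetical user
    "arn:aws:iam::540274555001:role/SamlRoleAdmin",
    "arn:aws:iam::540274555001:saml-provider/NTKSSO",
)
print(attrs[SESSION_ATTR])  # ['jdoe']
```

Comparing this output with the role and provider configured in AWS IAM is a quick way to spot a mismatched ARN before testing a real login.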

Configuring filters

Setting up additional 2FA

$ ntk cfg setc :sso.base.\"authproc.idp\" '{
"90": {
"class": "notakey:Filter",
"user_id.attr": "sAMAccountName",
"endpoints": [
{
"name": "Mobile ID validation",
"url": "https://demo.notakey.com/",
"service_id": "bcd05d09-40cb-4965-8d94-da1825b57f00",
"client_id": "zyx",
"client_secret": "xyz",
"service_logo": "https://demo.notakey.com/_cimg/b5d3d0f7-e430-4a30-bd34-cea6bab9c904?size=300x150"
}
]
}
}' --json-input

Description:

Once you have configured a user repository as the authentication backend in the Identity Provider configuration, you can add further checks to the authentication flow. The example above adds an NtkAA-based backend for ID validation. The key “user_id.attr” is used to find the matching user in NtkAA, in this case by the sAMAccountName attribute from Active Directory. Additional modules and filters can be used for language provisioning with service providers, attribute mapping and alteration, etc.
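Hand-editing nested JSON inside a single-quoted shell argument is error-prone. A minimal sketch, assuming you prefer to generate the command from Python: the helpers `notakey_filter` and `setc_command` are hypothetical, while the key names and values are copied from the example above (the optional `service_logo` is omitted for brevity).

```python
import json
import shlex


def notakey_filter(user_id_attr, endpoints, priority="90"):
    """Build an authproc.idp entry for notakey:Filter (hypothetical helper)."""
    return {
        priority: {
            "class": "notakey:Filter",
            "user_id.attr": user_id_attr,
            "endpoints": endpoints,
        }
    }


def setc_command(key, value):
    """Render `ntk cfg setc <key> '<json>' --json-input` with shell-safe quoting."""
    return f"ntk cfg setc {shlex.quote(key)} {shlex.quote(json.dumps(value, indent=2))} --json-input"


endpoint = {
    "name": "Mobile ID validation",
    "url": "https://demo.notakey.com/",
    "service_id": "bcd05d09-40cb-4965-8d94-da1825b57f00",
    "client_id": "zyx",
    "client_secret": "xyz",
}
cmd = setc_command(':sso.base."authproc.idp"', notakey_filter("sAMAccountName", [endpoint]))
print(cmd)
```

`shlex.quote` wraps the key in single quotes, which the shell reads the same way as the escaped form `:sso.base.\"authproc.idp\"` used in the manual.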

Managing certificates

Adding a certificate

$ ntk cfg setc :sso.certificates.server '{

"description": "Demo certificate",

"pem": "-----BEGIN CERTIFICATE-----\nMIIDdDCCAlwCCQC30UW2PPlkQjANBgkqhkiG9w0BAQsFADB8MQswCQYDVQQGEwJM\nVjETMBEGA1UECBMKU29tZS1TdGF0ZTENMAsGA1UEBxMEUmlnYTEQMA4GA1UEChMH\nTm90YWtleTEWMBQGA1UEAxMNbm90YWtleS5sb2NhbDEfMB0GCSqGSIb3DQEJARYQ\naW5mb0Bub3Rha2V5LmNvbTAeFw0xNzAzMjExMjM4MzZaFw0yNzAzMTkxMjM4MzZa\nMHwxCzAJBgNVBAYTAkxWMRMwEQYDVQQIEwpTb21lLVN0YXRlMQ0wCwYDVQQHEwRS\naWdhMRAwDgYDVQQKEwdOb3Rha2V5MRYwFAYDVQQDEw1ub3Rha2V5LmxvY2FsMR8w\nHQYJKoZIhvcNAQkBFhBpbmZvQG5vdGFrZXkuY29tMIIBIjANBgkqhkiG9w0BAQEF\nAAOCAQ8AMIIBCgKCAQEA5L/rjXT0dEBmN3BSnsmLCwF9goOZWb5RNvvc+dnUQnaG\n5nJ8xMPSpEAm4y2ZlC8Uhm56H+7A2cUf/ogkUcKkyQHyXQrjjPzec5h3+734Mmit\nvHAAzC/j9smDOvdde9evMUDH9Sn7oJtpz+MKckTFvsBoLGuBPiuLn8P9HCmLKlMN\njR2hfJab+DZG+eysAGkoiGS9zOxMVF9Xsvk/EHxm5p3ZPF2oOEr8cimgQO/ew29+\nfF4etwskre/AA8KCw/6Bg2XwZ3HmFPJ9nd/zJSzqm5U96WFEth4ABVqZE4MS4rRo\n2K+eQy2rXzDnmiL3cGiLuL9aal+9JCFRxKE1RSIdEQIDAQABMA0GCSqGSIb3DQEB\nCwUAA4IBAQC7T9Yqu0+ONT/MP8ZNmbUs5B6+/S0yIreLC1pMmJymxMDIFQCnxeRf\naxDtDfEJVzkiMAl2Q3q/HApCDh/l+hpbn4oKoDXTQvWiZpOWhSR/QcOfpuPGnk09\nmhcZIbI5WSYfsPQbXpu02mnmanXgl7Pt4teQGdQBTq0cO4xj7FOdEIcxD8WAmF9E\ntS+DhrNoOaaR2mwicHZ5+cfMKviNWw3qmolDWZKm8tIZTU21+lU+LyB6uMwCgQ/y\nCZG4eY0O+CKlzn6nlsOLgpKhZDtiNgPN3eGcIHfw+oxCECH6de/Ewuk3Co6Thnh1\nRu6Lt+g/od2IYvKl0R6efBTeuwozoubJ\n-----END CERTIFICATE-----\n",

"key": "-----BEGIN RSA PRIVATE KEY-----\nMIIEowIBAAKCAQEA5L/rjXT0dEBmN3BSnsmLCwF9goOZWb5RNvvc+dnUQnaG5nJ8\nxMPSpEAm4y2ZlC8Uhm56H+7A2cUf/ogkUcKkyQHyXQrjjPzec5h3+734MmitvHAA\nzC/j9smDOvdde9evMUDH9Sn7oJtpz+MKckTFvsBoLGuBPiuLn8P9HCmLKlMNjR2h\nfJab+DZG+eysAGkoiGS9zOxMVF9Xsvk/EHxm5p3ZPF2oOEr8cimgQO/ew29+fF4e\ntwskre/AA8KCw/6Bg2XwZ3HmFPJ9nd/zJSzqm5U96WFEth4ABVqZE4MS4rRo2K+e\nQy2rXzDnmiL3cGiLuL9aal+9JCFRxKE1RSIdEQIDAQABAoIBAHmnb/itKIzi6vm0\n7NuxyBa0VjGhF19ZDgw16pGePXqTWq8YWC61DkN4MrZDPBhI6ZuNCboN2dZ3NcrC\nUL6Cy+xy8ph1AAutOAk2HyltIKB+d1duIZ52IcDP7tDfWYJRdMS29SD3kPEbdiyv\nTJD07k3COiTVj8imk/0F2Iivt2lr/GLyPSKFckNzCC3Tfz7pCaq2+JBxt08QtZPl\nqL81or7+BpcUNgmZyA1WLZsMpoYeXX6fsndUrVcfZIayaIXjX9NJYxQuwW44EYxq\nhBRS+goxXo+xQSKCSEuPf36pc82sxUiNEszAOd5Iqjrl1pRVVoLFdVLXFaQ0f0J8\nq7PXjYECgYEA/mhXuoTQuVsJvqCfZSLs/bZnJotSi9na4oOmZBp+LokRzHYbmchm\nSjX1scc9fm5MMOe82WAUMBYZhBVJrjqk/rQvGBMpRqut6JiujpSDR27xAcpJD79P\ngBre3PSDJ84koI8PTyGTcPTa3RqY5+5Iv4JsFPFCshFGMI/ldwRxickCgYEA5i52\nuSyMohMrAfHyS3IG1c/bGrhSZ7O790nUakEGpwi8paD/qi4klb5LYmJLQbaZOKes\nh6voXQ3Zhvd0j6sXzi2d802OlB9LIq+FcctiiS3wR1YykbAq3RDAfYMEEnSdRnp3\noz8i3l1y/a1bP3RnjSmeaMVG/nITFlfMbfCEnQkCgYBemQvt9g7qrVhdQrqiT69R\n0+5dHbcu+23xhkRrupIq2Zr9rPksYKDwfUoDtfM+vOKl2LWXGqvHCaCpRYUlPPc3\nImbUi+NwPMwozgUyTTTXbgA9yysJqPh1yQgPnvfZ6EQkU628nd6GRPXQ+1/Z9fel\nBmkMDH3hWpz/17HaZJOXSQKBgETamUEDBn5k5XSLf0L6NPk4V/5CLMRAi3WJbDTs\nhqTohCW3Z0Ls0pzIc5xWctSRXnwIDB/5WGSdg/hPhVqEf3Z5RspE5OWCBuO1RWGo\nySznxPxR2Iaj/+5o2GuzCUDMCU/PyoHWnQOPSJqBhM4Sb/dV/8CvYnEyhmskkE5C\nqCihAoGBAJr0fISYmFtX36HurmFl+uCAcOPXy6qEX3Im6BA6GiNGmByk+qFVP5e9\nLRjX7LouGlWyPridkODdWAwCTxn1T7FcGFRZlK0Ns3l8Bp+FMznXX05TlkNZpC6X\nV9F0GVQtrwsos605Z8OG4iMRkn+3Qk6FZ8QnlBX807killRcGlC4\n-----END RSA PRIVATE KEY-----\n"

}' --json-input

Description:

Adds a certificate to be used in the SSO configuration. Certificate data must be line-delimited with the \n character and may include the -----BEGIN and -----END marker lines. The SSO service must be restarted after every change in the certificate store. To delete a certificate, use ntk cfg delc sso.certificates.server. Elsewhere in the configuration, refer to the key as <name>.key and to the certificate as <name>.pem, where <name> is the unique certificate name; in this example, “server.key” and “server.pem”.
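Converting PEM files into the single-line, \n-delimited form by hand is tedious. The sketch below relies on the fact that `json.dumps` escapes each newline as the two characters `\n`. The function names and file paths are hypothetical, and the PEM check is only a sanity test, not full certificate validation.

```python
import json
import shlex
from pathlib import Path


def certificate_payload(cert_pem: str, key_pem: str, description: str) -> str:
    """Build the JSON object for `ntk cfg setc :sso.certificates.<name>` (hypothetical helper).

    json.dumps encodes every newline inside the PEM text as the escape \\n.
    """
    for label, text in (("certificate", cert_pem), ("key", key_pem)):
        if "-----BEGIN" not in text:
            raise ValueError(f"{label} does not look like PEM data")
    return json.dumps({"description": description, "pem": cert_pem, "key": key_pem}, indent=2)


def certificate_command(name: str, cert_path: str, key_path: str, description: str) -> str:
    """Read PEM files from disk and render the matching ntk command line."""
    payload = certificate_payload(Path(cert_path).read_text(), Path(key_path).read_text(), description)
    return f"ntk cfg setc :sso.certificates.{name} {shlex.quote(payload)} --json-input"
```

For example, `certificate_command("server", "server.crt", "server.key", "Demo certificate")` produces a command equivalent to the one above; remember to run ntk sso restart afterwards.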

Enabling modules

Typical module configuration

$ ntk cfg setc :sso.modules '[ "cron", "startpage", "ldap", "notakey" ]' --json-input

Description:

Configures the list of enabled modules. User repositories and authentication filters determine which modules are required. For a basic setup with LDAP (or Active Directory), the list above is sufficient. Follow each change with ntk sso restart.

Plugins

Additional custom modules can be configured for NtkSSO to customize the authentication workflow or add custom templates. All plugins must be bundled as Docker images that provide code and template files to the main container. See Notakey Examples for a skeleton project that can be used to build plugin images.

To configure a plugin, add it to the modules array in the format [module name]:[repository path]:[image tag].

$ ntk cfg setc :sso.modules '[ "cron", "startpage", "ldap", "notakey", "mytheme:myorg/sso-mytheme:latest" ]' --json-input
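Built-in modules are plain names, while plugins use the three-part format above. Below is a small Python sketch for building and checking such entries before running the command. `ModuleEntry`, `parse_module` and `plugin_entry` are hypothetical names, and the default `latest` tag is an illustrative choice. The parser splits on the first and last colon, so a colon inside the repository path does not break it.

```python
import json
from typing import NamedTuple, Optional


class ModuleEntry(NamedTuple):
    name: str
    repository: Optional[str] = None  # None for built-in modules such as "ldap"
    tag: Optional[str] = None


def parse_module(entry: str) -> ModuleEntry:
    """Split "name" or "name:repository:tag" into its parts (hypothetical helper)."""
    if ":" not in entry:
        return ModuleEntry(entry)
    name, rest = entry.split(":", 1)
    repository, sep, tag = rest.rpartition(":")
    if not (name and sep and repository and tag):
        raise ValueError(f"expected [module name]:[repository path]:[image tag], got {entry!r}")
    return ModuleEntry(name, repository, tag)


def plugin_entry(name: str, repository: str, tag: str = "latest") -> str:
    """Format a plugin entry for the :sso.modules array."""
    return f"{name}:{repository}:{tag}"


modules = ["cron", "startpage", "ldap", "notakey", plugin_entry("mytheme", "myorg/sso-mytheme")]
print(json.dumps(modules))
# ["cron", "startpage", "ldap", "notakey", "mytheme:myorg/sso-mytheme:latest"]
```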

Adding service provider logos

Use scp to copy logo files to the /home/ntkadmin/userlogos directory. Files can be in any format, but PNG with a transparent background is preferred; use 250x80px for optimal scaling.

$ scp mylogo.png ntkadmin@ntk.example.com:/home/ntkadmin/userlogos/

You can then use the URL https://sso.example.com/userlogos/mylogo.png as the start page logo for the required SP.
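Before copying a PNG logo, you can check its dimensions locally. This sketch reads the width and height from the IHDR chunk, which the PNG specification places directly after the 8-byte signature. The helper names and the `sso.example.com` host default are hypothetical, and transparency is not checked.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
RECOMMENDED_SIZE = (250, 80)  # width x height recommended above


def png_size(data: bytes):
    """Return (width, height) from the first 24 bytes of a PNG file."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    return struct.unpack(">II", data[16:24])


def logo_url(filename: str, sso_host: str = "sso.example.com") -> str:
    """URL under which a file copied to userlogos is expected to be served."""
    return f"https://{sso_host}/userlogos/{filename}"


def check_logo(path: str) -> str:
    """Warn if a PNG logo is not 250x80px, then return its start page URL (hypothetical helper)."""
    p = Path(path)
    with p.open("rb") as fh:
        size = png_size(fh.read(24))
    if size != RECOMMENDED_SIZE:
        print(f"{p.name}: {size[0]}x{size[1]}px, 250x80px recommended for optimal scaling")
    return logo_url(p.name)
```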

Command reference

ntk sso restart

Usage:

ntk sso restart

Description:

Stops and starts SSO service. Use this command to apply configuration changes.

ntk sso start

Usage:

ntk sso start [--force] [--update]

Description:

Starts the SSO service. Use the --force option to skip checking that the TLS certificate matches the configured DNS hostname, which is useful e.g. during initial setup. Use --update to update custom plugin modules, if any are configured; new images will then be loaded from the configured remote repository.

ntk sso stop

Usage:

ntk sso stop

Description:

Stops the SSO service. The service remains stopped until ntk sso start is called. The stopped state persists across appliance restarts.

ntk sso validate

Usage:

ntk sso validate

Description:

Validates SSO service configuration.
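When changes are scripted, validating before restarting helps avoid a restart with a broken configuration. A minimal Python sketch, assuming each ntk command exits with a non-zero status on failure (not confirmed by this manual). The function name `apply_sso_setting` is hypothetical, and `run` can be swapped for a fake in tests.

```python
import json
import subprocess
from typing import Callable, List


def apply_sso_setting(key: str, value, run: Callable = subprocess.run) -> List[List[str]]:
    """Set an SSO key, validate the configuration, then restart the service.

    Stops at the first command with a non-zero exit code (assumed failure signal)
    and returns the list of commands that were executed.
    """
    steps = [
        ["ntk", "cfg", "setc", key, json.dumps(value), "--json-input"],
        ["ntk", "sso", "validate"],
        ["ntk", "sso", "restart"],
    ]
    executed = []
    for cmd in steps:
        executed.append(cmd)
        result = run(cmd)  # argv list, so no shell quoting is needed
        if result.returncode != 0:
            raise RuntimeError(f"{' '.join(cmd[:3])} failed with exit code {result.returncode}")
    return executed
```

For example, `apply_sso_setting(":sso.modules", ["cron", "startpage", "ldap", "notakey"])` corresponds to the module configuration shown earlier, followed by validate and restart.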

ntk sso status

Usage:

ntk sso status

Description:

Shows status of SSO service.

ntk sso sync

Usage:

ntk sso sync [--delete-missing]

Description:

Pulls newer content from the cluster datastore and publishes any locally added or edited files to it. The location of the local directory is defined in /opt/notakey/cfg/.env as ASSETS_DIR; usually this is the /home/ntkadmin/userlogos directory. Adding --delete-missing removes files that are stored in the cluster but missing locally. Any files exceeding 350kB in size will be ignored and must be copied manually to other nodes.
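To find the files that sync will skip, a short Python sketch can list oversized assets. Assumptions: the .env file uses simple KEY=VALUE lines, "350kB" is read as 350 × 1024 bytes (adjust `SYNC_LIMIT_BYTES` if the limit is decimal), and only the top level of the directory is scanned. All function names are hypothetical.

```python
from pathlib import Path

SYNC_LIMIT_BYTES = 350 * 1024  # assumption: 350kB interpreted as 350 KiB
DEFAULT_ASSETS_DIR = "/home/ntkadmin/userlogos"


def assets_dir_from_env(env_text: str, default: str = DEFAULT_ASSETS_DIR) -> str:
    """Return ASSETS_DIR from KEY=VALUE text (simplified .env parser)."""
    for line in env_text.splitlines():
        line = line.strip()
        if line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip() == "ASSETS_DIR":
            return value.strip().strip('"').strip("'")
    return default


def oversized_assets(assets_dir: str, limit: int = SYNC_LIMIT_BYTES):
    """List (name, size) of top-level files larger than the sync limit."""
    return sorted(
        (p.name, p.stat().st_size)
        for p in Path(assets_dir).iterdir()
        if p.is_file() and p.stat().st_size > limit
    )


if __name__ == "__main__":
    env_file = Path("/opt/notakey/cfg/.env")
    assets = assets_dir_from_env(env_file.read_text()) if env_file.exists() else DEFAULT_ASSETS_DIR
    if Path(assets).is_dir():
        for name, size in oversized_assets(assets):
            print(f"{name}: {size} bytes, copy to other nodes manually")
```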

Authentication Proxy

Commands in the ntk ap section control the Notakey Authentication Proxy.

Configuration settings

The following configuration settings must be present to start this service.

| Key | Description | Default | Possible values |
|---|---|---|---|
| :ap.vpn_port_in | Incoming RADIUS service port on which the Notakey Authentication Proxy listens for inbound requests from VPN access gateways. | 1812 | 1-65000 |
| :ap.vpn_port_out | RADIUS server port to which the Notakey Authentication Proxy sends upstream requests. | 1812 | 1-65000 |
| :ap.vpn_radius_address | Hostname or IP address of the RADIUS server to which the Notakey Authentication Proxy forwards requests. Specify multiple hosts by separating them with :, e.g. “192.168.0.1:192.168.0.2” | (none) | string |
| :ap.vpn_secret_in | CHAP secret for incoming requests from VPN access gateways. | (none) | string, max 16 characters |
| :ap.vpn_secret_out | CHAP secret for outgoing requests to the RADIUS server. | (none) | string, max 16 characters |
| :ap.vpn_access_id | Application Access ID, as shown on the Dashboard Application settings page. | (none) | string |
| :ap.vpn_message_ttl | Authentication message time to live, in seconds. Should match the RADIUS timeout value of the VPN gateway. | 300 | integer |
| :ap.message_title | Authentication message title. The title is shown in the application inbox for each authentication message, e.g. “VPN login to example.com” | “VPN authentication” | string |
| :ap.message_description | Authentication message contents. The message is shown in the push notification and in the inbox. Use {0} as a placeholder for the username. | “Allow user {0} VPN login?” | string |
| :ap.proxy_receive_timeout | Internal receive timeout for RADIUS messages. | 5 | integer |
| :ap.proxy_send_timeout | Internal send timeout for RADIUS messages. | 5 | integer |
| :ap.api_provider | Backend API provider. Do not configure if using the local instance. The URL must be in the format https://host/api | <local instance> | string |
| :ap.api_timeout | Backend API call request timeout. | 5 | integer |